Exploring challenges in simulation verification

With simulations receiving constant attention as tools to support decisions and develop strategies in response to events such as the ongoing COVID-19 pandemic, people frequently ask how they can be expected to trust findings obtained from a simulation. Many factors play into the question of credibility, including model assumptions, the use of data, and calibration, to name a few. Many people do not know how a simulation is expected to work (e.g., how it represents the characteristics of COVID-19 spread) or how to properly interpret its outcomes. To address such concerns, simulation modelers refer to the verification and validation (V&V) of models.

To explore the first V of V&V, my collaborators at Old Dominion University’s Virginia Modeling, Analysis and Simulation Center and I have published a PLOS ONE article that presents our exploratory content analysis of over 4,000 simulation papers published from 1963 to 2015. See the abstract and reference below for details.

Abstract

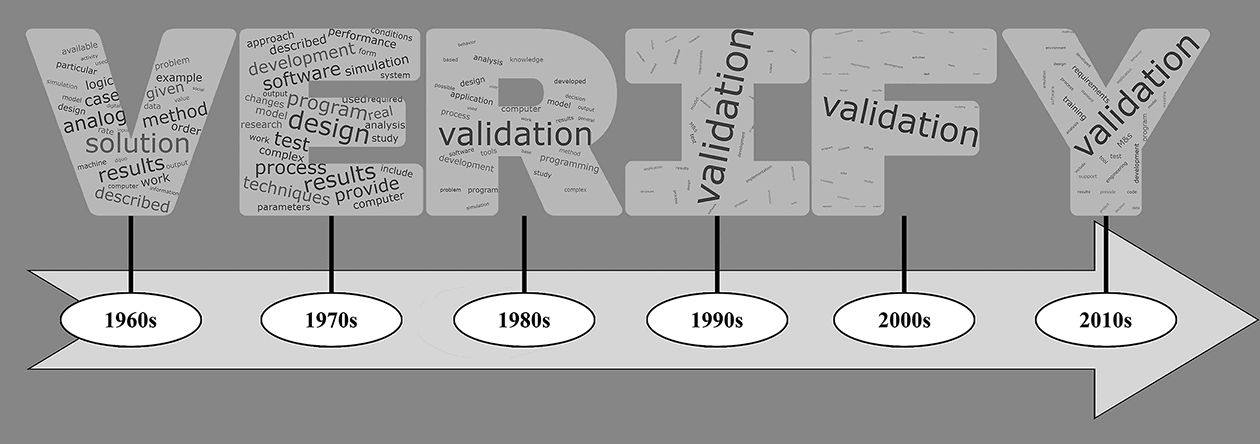

Verification is a crucial process to facilitate the identification and removal of errors within simulations. This study explores semantic changes to the concept of simulation verification over the past six decades using a data-supported, automated content analysis approach. We collect and utilize a corpus of 4,047 peer-reviewed Modeling and Simulation (M&S) publications dealing with a wide range of studies of simulation verification from 1963 to 2015. We group the selected papers by decade of publication to provide insights and explore the corpus from four perspectives: (i) the positioning of prominent concepts across the corpus as a whole; (ii) a comparison of the prominence of verification, validation, and Verification and Validation (V&V) as separate concepts; (iii) the positioning of the concepts specifically associated with verification; and (iv) an evaluation of verification’s defining characteristics within each decade. Our analysis reveals unique characterizations of verification in each decade. The insights gathered helped to identify and discuss three categories of verification challenges as avenues of future research, awareness, and understanding for researchers, students, and practitioners. These categories include conveying confidence and maintaining ease of use; techniques’ coverage abilities for handling increasing simulation complexities; and new ways to provide error feedback to model users.

Reference

A content analysis-based approach to explore simulation verification and identify its current challenges

C.J. Lynch, S.Y. Diallo, H. Kavak, J.J. Padilla

PLOS ONE, 2020, doi:10.1371/journal.pone.0232929

[Paper]